What “Black Mirror” Taught Us About Surveillance and Systemic Control

Black Mirror has never been science fiction in the usual sense. It is allegory. Each episode distills the anxieties of a society where data, technology, and power converge. The question it forces us to ask is not “could this happen?” but “hasn’t it already?”

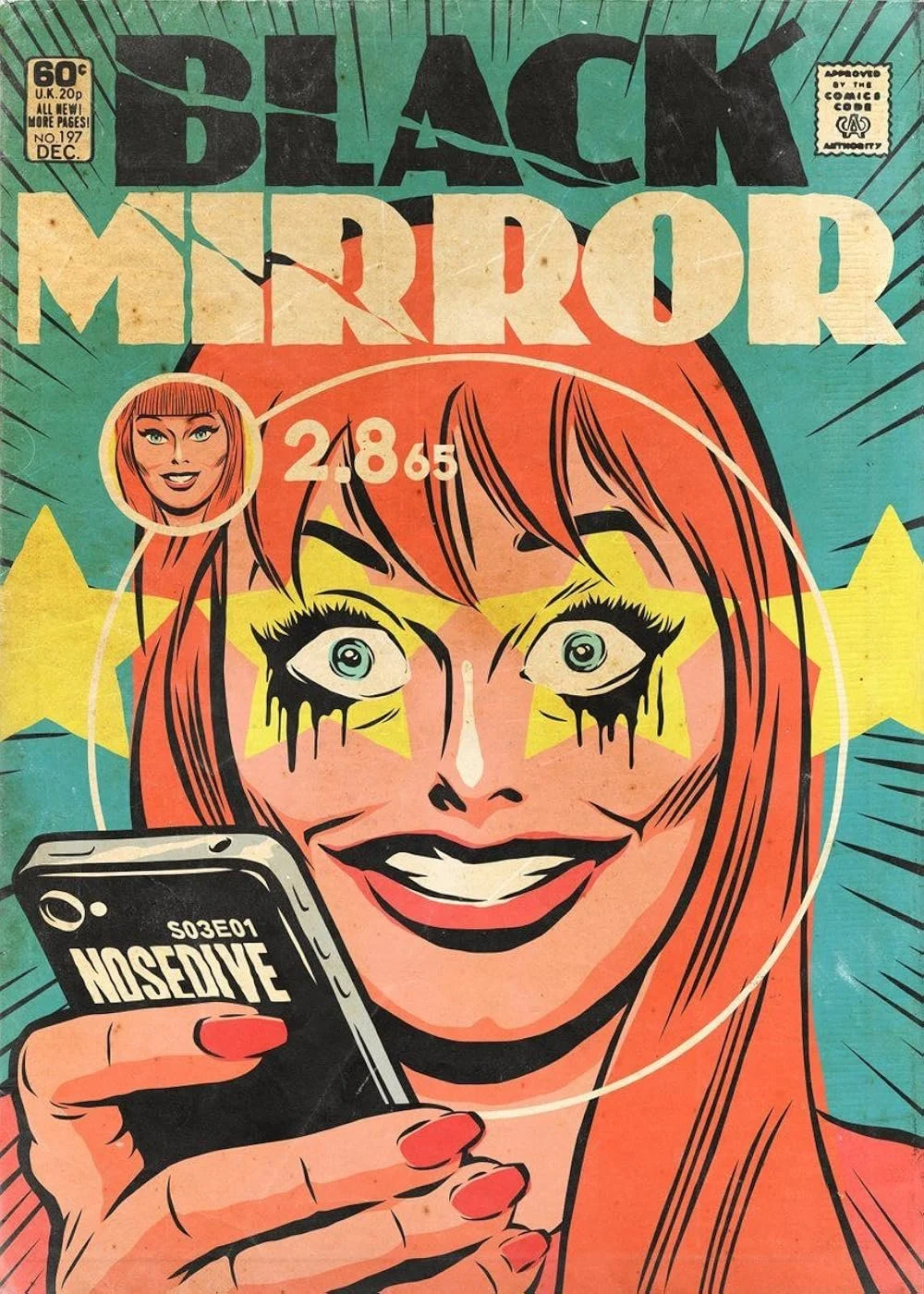

1. Nosedive and the Social Credit Reality

In Nosedive, every interaction contributes to a public rating that dictates housing, travel, and opportunity. This mirrors China’s social credit system, a patchwork of national blacklists and local pilot schemes that rate people on behavior, financial reliability, and even their associations. Landing on a blacklist can mean being barred from flights, high-speed trains, or certain jobs. Surveillance here is not hidden but normalized, woven into daily life until compliance is the only survival strategy.

Black Mirror Episode: Nosedive follows a woman whose entire life depends on maintaining a high social rating. Every interaction affects her housing, travel, and job prospects.

Real-Life Parallel: China’s social credit system scores citizens on behavior, spending, and associations. Low scores can mean loss of travel privileges, internet restrictions, or blocked opportunities. Surveillance becomes normalized through social compliance.

Both expose how constant evaluation reshapes human behavior until authenticity becomes a liability.

2. Arkangel and the Myth of Safety

Arkangel follows a mother who implants surveillance tech in her daughter to track and filter her experiences. The intention is safety. The outcome is trauma. Real-world echoes appear in parental tracking apps and in school surveillance software across the United States, where students’ keystrokes, browsing histories, and even facial expressions are monitored. Though sold as protection against danger, these systems often punish marginalized youth disproportionately, creating a cycle of distrust and control.

Black Mirror Episode: Arkangel centers on a mother who implants surveillance tech in her child to monitor, filter, and control her experiences, supposedly in the name of safety.

Real-Life Parallel: Parental tracking apps and school surveillance systems across the U.S. monitor students’ browsing, emails, and even facial expressions. The data is often used to punish rather than protect, disproportionately affecting marginalized youth.

Control disguised as protection always costs more than it claims.

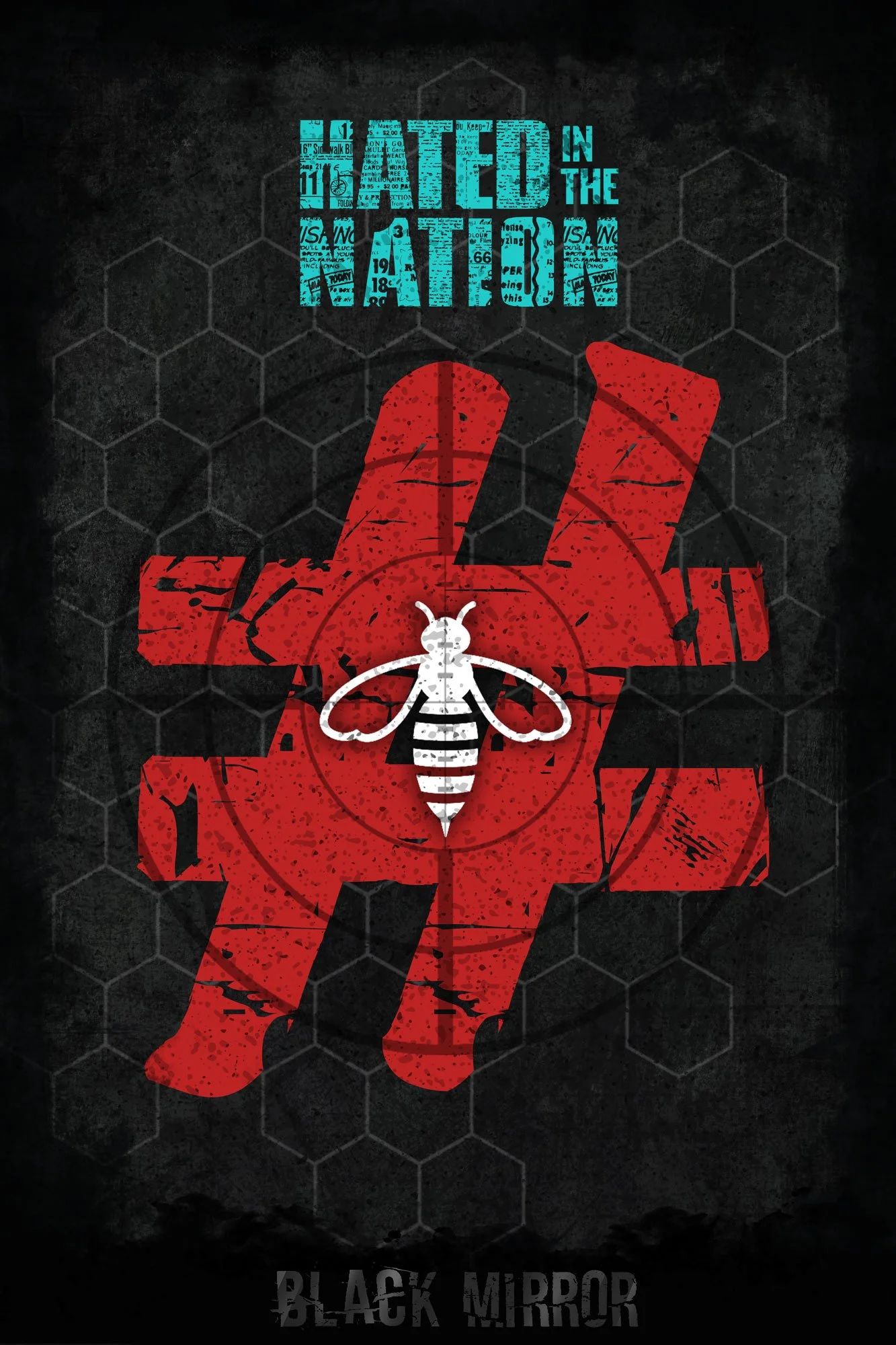

3. Hated in the Nation and Algorithmic Amplification

In Hated in the Nation, social media outrage is weaponized, turning public shaming into literal death sentences. Today’s analog is not mechanical bees but algorithmic amplification. Engagement-driven feeds on platforms like Facebook and X (formerly Twitter) reward outrage because it keeps people scrolling, regardless of whether the outrage is justified. The result: mobs form, reputations collapse, and sometimes lives are lost. Surveillance here takes the form of digital memory: every post cataloged, every misstep immortalized.

Black Mirror Episode: In Hated in the Nation, online outrage leads to real-world death sentences as people weaponize hashtags without accountability.

Real-Life Parallel: Social media algorithms on platforms like X (Twitter), Facebook, and TikTok amplify outrage to drive engagement. Public shaming becomes a currency of attention, and mistakes become permanent digital scars.

Black Mirror’s swarm of robotic bees has become our swarm of algorithmic mobs.

4. White Bear and Predictive Policing

The protagonist in White Bear is trapped in a cycle of punishment designed for public consumption. Modern policing echoes this in predictive policing tools like PredPol, which use historical crime and arrest records to forecast where crime will occur. The flaw: those records reflect where police have already chosen to look. Officers are sent back to the same neighborhoods, record more incidents there, and the new data confirms the old forecast. Communities already over-policed remain targeted, and “justice” becomes a feedback loop of systemic control.

Black Mirror Episode: White Bear traps its protagonist in a punishment loop performed for the entertainment of the public. Her suffering is content.

Real-Life Parallel: Predictive policing algorithms like PredPol rely on biased arrest data to forecast crime, repeatedly targeting the same neighborhoods. The cycle of over-policing continues, disguised as objective technology.

Punishment becomes performance when systems automate judgment.

Closing Thought

Black Mirror’s genius lies in how eerily familiar its stories feel. Surveillance doesn’t always wear a uniform; it often arrives as convenience, innovation, or protection. From social scoring to predictive policing, our systems have already blurred the line between safety and subjugation.

The mirror doesn’t show us what’s next. It shows us what we’ve already accepted.